Hz System Overview

Authoring Musical Notes, Soundscaping, Live Coding

Audio IO, MIDI IO, Open Sound Control

Asset-based, Nonlinear Workflow

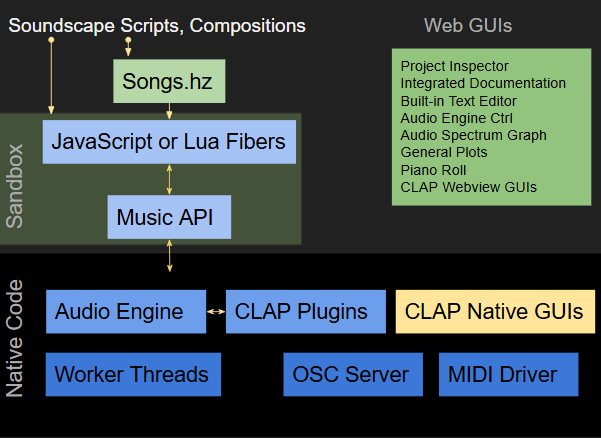

Hz is a high-performance digital audio synthesis engine coupled with a modern, portable GUI system built from web technologies (webview). Hz is controlled both through GUI operations and through user-provided scripts written in either JavaScript or Lua.

Users author soundscapes or musical compositions through a combination of Songs.hz sheets and control scripts. Control scripts employ the Music API to instantiate CLAP plugin and Hz-builtin nodes into an audio graph. Sounds are then triggered by delivering sample-accurate events (MIDI, OSC, programmatic notes and parameter changes) to the nodes in the graph. Events cause nodes to alter their audio signal processing, and the changes propagate through the audio graph, ultimately producing the "mix" delivered to your audio output device.

Because Hz nodes are CLAP plugins, the audio capabilities of the engine are virtually unlimited. You can author your own plugins or procure them from commercial or freeware purveyors. See Resources for more details.

Hz ships with a basic set of plugins so you can get going immediately. See Getting Started to take the first baby steps with Hz.

Hz Scripting and Native Components

As seen above, Hz is divided into native and scripting components. Scripting components are further divided into two categories: Sandbox and Web GUIs. All of the Web GUIs are written atop the webview engine and are available for web-dev inspection. Your custom scripts run in separate JavaScript (or Lua) sandboxes to ensure clean, reproducible execution contexts. JavaScript code running in a sandbox window can opt to update that window's contents, as shown by the Tower of Hanoi example. Manipulating the webview DOM is widely understood and well documented online, so details on the topic are beyond the scope of this Hz documentation. The same holds true for other web technologies like Web Workers, JavaScript async functions and Promises, and so on.

Scripts communicate with native code via a variety of APIs and event notification mechanisms. For example, the Audio Monitor receives updates from the running audio engine at a 60 Hz refresh rate. Similarly, your control scripts use the Music API to update the audio graph, schedule notes, modify note expression parameters, or synchronize with other asynchronous tasks.

The manner in which you author your creations is a personal choice that may change with your project. This choice can be informed by a discussion on notes and events found here.

As with most programmable systems, you are limited only by your imagination and programming skilz.

Our Examples may also help stimulate your thinking on this topic.

Native GUIs

Most CLAP plugins build their user interfaces using platform-specific APIs that populate a private, stand-alone window provided by Hz for the purpose. Your scripts can Show or Hide these windows, and the Windows Subpanel also supports visibility toggling. Hz also exposes an experimental API for webview-based plugin GUIs and uses it for its built-in CLAP plugins. The advantage in the context of Hz is that the displays reside within the Hz window as draggable, sizeable tabs within its Flexible Layout system. Moreover, no platform-specific GUI code is required.

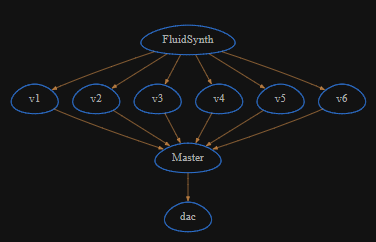

The Audio Graph

The audio engine processes multiple frames (a chunk) of audio sample data in the order expressed by your audio graph. Graphs are constrained to be directed and acyclic (i.e. they must be a DAG). To accomplish common audio effects like feedback, delay and echo, special graph transformations may be performed to remediate cycles. Because it operates on chunks of samples, Hz is able to leverage your computer's SIMD/Vector Processing capabilities to further accelerate signal processing.
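To make the ordering constraint concrete, here is a minimal sketch of how a processing order can be derived from a DAG using Kahn's algorithm. It is an illustration only, not Hz's actual scheduler; the function `processingOrder` and the node names are hypothetical.

```javascript
// Hypothetical sketch, not Hz's scheduler.
// Kahn's algorithm: returns the nodes in an order where every node
// appears after all of the nodes that feed it.
function processingOrder(nodes, edges) {
  const indegree = new Map(nodes.map((n) => [n, 0]));
  const downstream = new Map(nodes.map((n) => [n, []]));
  for (const [from, to] of edges) {
    downstream.get(from).push(to);
    indegree.set(to, indegree.get(to) + 1);
  }
  // Nodes without inputs can be processed first.
  const ready = nodes.filter((n) => indegree.get(n) === 0);
  const order = [];
  while (ready.length > 0) {
    const n = ready.shift();
    order.push(n);
    for (const d of downstream.get(n)) {
      indegree.set(d, indegree.get(d) - 1);
      if (indegree.get(d) === 0) ready.push(d);
    }
  }
  // Any node left unprocessed sits on a cycle.
  if (order.length !== nodes.length) {
    throw new Error("graph contains a cycle; it is not a DAG");
  }
  return order;
}

// Made-up node names for illustration.
const graphNodes = ["synth", "delay", "mixer", "dac"];
const graphEdges = [
  ["synth", "delay"],
  ["synth", "mixer"],
  ["delay", "mixer"],
  ["mixer", "dac"],
];
const order = processingOrder(graphNodes, graphEdges);
console.log(order.join(" -> ")); // synth -> delay -> mixer -> dac
```

A graph with a feedback edge (for example `mixer` back into `delay`) makes this function throw, which is why cycles have to be transformed away before processing.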

The results of a node calculation are passed to downstream nodes and generally wend their way to the DAC (digital-to-analog converter) node representing your audio output device. The graph representation is powerful in that it compactly exposes potential parallel processing opportunities to the audio engine and concisely represents all the data flow in an audio session. The graph can be efficiently updated on the fly by your control scripts using the Music API. This means you can achieve computational efficiencies as the complexity of your creations changes. This is often reflected in live voice counts and load average as displayed by the AudioStatus Panel.

Node Events

Not explicit in the audio graph display is the event flow requested by your control scripts. Events are delivered asynchronously to graph nodes prior to the processing of each audio sample chunk. They are tagged with a timestamp (either ASAP or a future time) to describe correct ordering. To achieve sample-accurate event scheduling, the chunk size of a computational unit may be modified to align with event request timing. The CLAP plugin API characterizes the event handling machinery and requires that adhering plugin instances (aka nodes) react to sub-chunk event requests accordingly. Events fall into two categories: plugin parameter value edits and standard note on/off/expression.
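The idea of aligning chunk boundaries with event times can be sketched as follows. This is a simplified illustration under stated assumptions, not the Hz or CLAP implementation: the event shape (`time` as an absolute frame number, `null` meaning ASAP) and the function `splitChunk` are hypothetical.

```javascript
// Hypothetical sketch: split one chunk of `chunkFrames` frames, starting at
// absolute frame `chunkStart`, into sub-chunks whose boundaries coincide
// with event timestamps.
function splitChunk(chunkStart, chunkFrames, events) {
  const chunkEnd = chunkStart + chunkFrames;
  // ASAP events (time === null) and overdue events apply at the chunk start.
  const offsets = events
    .map((e) => (e.time === null ? chunkStart : Math.max(e.time, chunkStart)))
    .filter((t) => t < chunkEnd) // later events wait for a later chunk
    .map((t) => t - chunkStart);
  // Unique, sorted cut points; offset 0 is always a boundary.
  const cuts = [...new Set([0, ...offsets])].sort((a, b) => a - b);
  return cuts.map((offset, i) => {
    const end = i + 1 < cuts.length ? cuts[i + 1] : chunkFrames;
    return { offset, frames: end - offset };
  });
}

const segments = splitChunk(1024, 128, [
  { time: null, name: "noteOn" },        // ASAP
  { time: 1024 + 48, name: "param" },    // 48 frames into this chunk
  { time: 1024 + 500, name: "noteOff" }, // belongs to a later chunk
]);
console.log(segments);
// [ { offset: 0, frames: 48 }, { offset: 48, frames: 80 } ]
```

Each sub-chunk is processed with its events already applied, so the parameter change in this example takes effect exactly 48 frames into the chunk.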

Audio and Modulator Nodes

Most nodes present in the audio graph are Anodes. These process audio signals from 0 or more input ports to produce audio signals on 1 or more output ports.

Hz introduces a new class of audio graph node, Modulators. These deliver periodic parameter events to targeted graph node parameters and thus are wired into the audio graph slightly differently from audio nodes. Hz includes a builtin collection of Modulators, and you can use them to express parameter changes via the audio graph. Note that your scripts can also make parameter changes, so this ability is offered as an alternate means to express parameter modulation. Parameter changes are necessarily lower frequency than audio-rate, and that makes the line between LFO audio nodes and Modulator nodes a little fuzzy. One clear distinction, however, is that Modulator nodes only change parameter values while an audio LFO node only produces audio signals. Both are handy to have in your toolbox. See here for more details.
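As a rough illustration of the distinction, the sketch below shows a sine "modulator" that emits one parameter event every few hundred frames rather than an audio-rate signal. Everything here is an assumption made for illustration: `modulatorEvents`, its option names, and the `{ time, value }` event shape are hypothetical and are not part of the Music API.

```javascript
// Hypothetical sketch: a sine modulator that emits one parameter event every
// `periodFrames` frames in [startFrame, endFrame), instead of audio samples.
function modulatorEvents(opts, startFrame, endFrame) {
  const { rateHz, min, max, periodFrames, sampleRate } = opts;
  const events = [];
  // First emission point at or after startFrame, aligned to the period.
  const first = Math.ceil(startFrame / periodFrames) * periodFrames;
  for (let frame = first; frame < endFrame; frame += periodFrames) {
    const phase = 2 * Math.PI * rateHz * (frame / sampleRate);
    const unit = 0.5 * (1 + Math.sin(phase)); // normalized to 0..1
    events.push({ time: frame, value: min + unit * (max - min) });
  }
  return events;
}

// One second of a 2 Hz sweep between 200 and 2000 (e.g. a filter cutoff),
// updated every 480 frames, which is 100 times per second at 48 kHz.
const modEvents = modulatorEvents(
  { rateHz: 2, min: 200, max: 2000, periodFrames: 480, sampleRate: 48000 },
  0,
  48000
);
console.log(modEvents.length, modEvents[0]); // 100 { time: 0, value: 1100 }
```

The update rate (100 events per second here) is far below the audio rate, which is the sense in which parameter modulation is lower frequency than an audio LFO.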

Native Threads

To achieve high-performance audio processing, Hz leverages the multi-core and multi-thread resources of your computer and its operating system. Here we describe the roles of various important threads within a single instance of Hz. Understanding threads is NOT required to create soundscapes with Hz. Fibers are a separate topic: they are a feature of the Music API that allows you to create, control and synchronize independent user-level scripts. For this reason, understanding fibers MAY be a topic worthy of your attention.

And yet curious readers may wonder about the thread situation and we hope this section on threads reduces any temptations toward conspiratorial thinking.

Main Thread

is the original thread provided to Hz when it starts up.

Event Loop Thread

is the thread in which non-audio events are triggered. Some OSes equate the Main and Event Loop threads, but it may still be useful to think of these roles as separate.

GUI Thread

is optional and not present when running in "headless" mode. When present, the Event Loop Thread and the GUI Thread are synonymous. This thread is also the primary contact point for communicating with JavaScript and the webview engine. Note that running headless is only available to Lua scripts, since the JavaScript interpreter requires a GUI thread.

Audio Thread

is created and deleted each time you start and stop the Audio Engine. This is the thread in which your CLAP plugins perform their primary audio computations as described by the audio graph. CLAP plugins require that some operations be performed in the Main thread and others (mostly the processing) in the Audio Thread.

Lua Thread

is created to manage the Lua interpreter context. Lua scripts are performed in this thread, and Music API requests are transferred between here and other threads before returning results back to the Lua interpreter.

Open Sound Control (OSC) Thread

is created to listen for and asynchronously deliver OSC events.

MIDI Thread

the creation of this thread depends upon the OS. On Linux this thread is employed to service and deliver MIDI event traffic.

Worker Threads

are used to support asynchronous file IO for the audio and GUI threads. Hz exposes a custom CLAP interface to plugins that allows them to request asynchronous work, including Fast Fourier Transforms, and receive the results. The Sample Manager uses this to pre-load samples for Hz.Samplo. Currently worker threads are used by Hz.plugins and Hz itself.

Tasks and Task Pools

Hz relies on the abstraction of tasks and task pools to perform most program state-change operations. There are a large number of Hz task subclasses, and these are created when a state-change is requested. Some tasks are so common that we pre-allocate them into a reusable pool in order to improve the performance of the memory allocation subsystems. Generally tasks originate in one thread and are performed in one or more other threads. Tasks often deliver values to their point of origin upon completion.

Hz utilizes efficient lock-free, thread-safe queues to achieve maximal multi-threaded performance.
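The pooling idea described above can be sketched in a few lines. This is a generic object-pool illustration, not Hz's native implementation (which also has to be thread-safe); the class `TaskPool` and the task shape are hypothetical.

```javascript
// Hypothetical sketch of a pre-allocated, reusable task pool.
class TaskPool {
  constructor(size, createTask) {
    this.createTask = createTask;
    // Pre-allocate `size` tasks up front so the hot path avoids allocation.
    this.free = Array.from({ length: size }, () => createTask());
    this.created = size;
  }
  acquire() {
    if (this.free.length > 0) return this.free.pop();
    this.created += 1; // pool exhausted: fall back to a fresh allocation
    return this.createTask();
  }
  release(task) {
    task.payload = null; // reset state before reuse
    this.free.push(task);
  }
}

const pool = new TaskPool(2, () => ({ type: "noteEvent", payload: null }));
const a = pool.acquire();
const b = pool.acquire();
const c = pool.acquire(); // pool was empty, so this one is newly allocated
a.payload = { note: 60 };
pool.release(a);
const d = pool.acquire(); // reuses the object that was just released
console.log(d === a, d.payload, pool.created); // true null 3
```

In a pool report, an execution count higher than the pool size would correspond to the fallback branch in `acquire`, or to pooled tasks being reused many times.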

Stats

You can ask Hz to dump performance-related statistics at the end of a session. Stats include the audio configuration, session runtime and memory usage, the nodecount histogram, per-plugin timing details and task-type counts.

% hz --stats -v

produced this output:

    Hz:
      audio:
        config:
          api: ds
          samplerate: 48000
          input dev: 132
          input channels: 2
          output dev: 129
          output channels: 2
          nbuffers: 8
          abuf count: 4
          abuf memory: 4096
        processing:
          frames: 6906368
          nodecounts:
            range: [0, 999]
            hist:
              - 53952 # [0 -> 9)
              - 0 # [9 -> 19)
          nodes:
            graph rebuilds: 1
            plugins:
              builtin.adc:
                instances: 1
              builtin.blackhole:
                instances: 1
              builtin.dac:
                instances: 1
              org.fluidsynth:
                instances: 1
                parameters: 44
                processtime: 8.28327
                scantime: 9.06e-05
          process time: 9.48836
          run time: 144.208
      system:
        platform: win32
        runtime:
          real: 164.26
          user: 4.11
          kernel: 1.16
        memusage (MB): 20.98
        memdetails:
          PageFaultCount: 139390
          PeakWorkingSetSize: 252534784
          WorkingSetSize: 66678784
          QuotaPeakPagedPoolUsage: 581688
          QuotaPagedPoolUsage: 561576
          QuotaPeakNonPagedPoolUsage: 5901360
          QuotaNonPagedPoolUsage: 28152
          PagefileUsage: 149127168
          PeakPagefileUsage: 341516288

    m debug Application Shutdown End
    ? debug Tasks created/destroyed 6504/6504
    ? debug name              | exec | pool |
    ? debug activateVoice     |    0 | 1800 |
    ? debug asyncWatcher      |    0 |    9 |
    ? debug audioControl      |    1 |    1 |
    ? debug audioStatusChange |    2 |    2 |
    ? debug audioSummary      | 6744 |   17 |
    ? debug cmdInit           |    0 |    1 |
    ? debug cmdQuit           |    0 |    2 |
    ? debug coUpdate          |    0 |  600 |
    ? debug fsEvent           |    0 |   12 |
    ? debug graphUpdate       |    2 |    1 |
    ? debug log               |  199 |  199 |
    ? debug noteDone          |    0 |  128 |
    ? debug noteEvent         | 2086 | 1800 |
    ? debug noteExpression    |    2 | 1200 |
    ? debug plot              |    0 |   16 |
    ? debug pluginAsyncTask   |    0 |  128 |
    ? debug pluginGUIUpdate   |    0 |  128 |
    ? debug registerNode      |    5 |    4 |
    ? debug wakeup            |  110 |  200 |
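A few derived figures can help when reading such a dump. The sketch below copies four values from the output above and assumes, without confirmation from the Hz documentation, that `process time` and `run time` are both measured in seconds and that their ratio is a meaningful approximation of average audio load.

```javascript
// Values copied from the stats dump above. Treating the times as seconds
// is an assumption, not something the dump states.
const stats = {
  samplerate: 48000,
  frames: 6906368,
  processTime: 9.48836,
  runTime: 144.208,
};

// Seconds of audio represented by the processed frames.
const audioSeconds = stats.frames / stats.samplerate;

// Fraction of the audio run time spent inside node processing.
const load = stats.processTime / stats.runTime;

console.log(audioSeconds.toFixed(2) + " s of audio");    // 143.88 s of audio
console.log((100 * load).toFixed(1) + "% average load"); // 6.6% average load
```

The roughly 143.9 seconds of processed audio sits close to the reported run time of 144.208, which is consistent with the seconds interpretation.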