Songs.hz is an experiment in music-design ergonomics. It's a musical notation that is easy on the eyes and makes simple the task of describing a multipart song to a digital audio environment with arbitrary plugins and plugin connections.

Songs.hz offers special notation to sequence, repeat and randomize musical ideas. It is designed to express multitrack compositions and allows tracks to share common properties like tempo and volume or to operate autonomously from other tracks. Files authored in Songs.hz format can be edited, visualized and performed by Hz directly. They can also be converted to MIDI, abc or other representations.

The purpose of this page is to provide reference information for Songs.hz authors. But as with most things, Songs.hz is best learned by example.

Here is a teaser:

Mr Reich

songs_hz:0.1.0

Song(Id:"Mr Reich")

{

Tempo = 140

phrase = [e4|pan f#4 b4 c#5 d5 f#4 e4 c#5 b4 f#4 d5 c#5]!25

Track(Id:"phase1", Mute:0)

{

MidiChannel = 1

pan = (pa:<?0,.25>) // random left position

$[phrase]

$[phrase]

}

Track(Id:"phase2", Mute:0)

{

MidiChannel = 2

Tempo *= .99

pan = (pa:<?.75,1>) // random right position

$[phrase]

Transpose = -12

MidiProgram = "cello"

$[phrase]

}

}

Format Overview

A songs file begins with the keyword songs_hz and a version number.

The songs file should contain at least one Song block and can collect

a number of songs into a single file.

songs_hz:0.1.0

Song(Id:"Sun", ...)

{

// song description

}

Song(Id:"Moon", ...) {} ...

Song, Block, Section, Statement

A Song is made up of Voice, Track and Handler blocks. Blocks are a collection of statements and represent independent scopes for Variables.

Block statements allow you to assign values to variables, to define event sequences and to invoke built-in functions. Musical events are nested within measures. A block can be further subdivided using Section blocks.

Variables are divided into two categories, system and private. As a rule, system variables are capitalized and private variables are not. Assignments affect only statements in their block or sub-blocks. This means you can assign different values to the same variable in different blocks.

Song Skeleton

Song(plist)

{

Pan = .5 // system variable assigned in song-scope

Sequencer = [Intro, Chorus!2] // optional sequencer assignment

Handler() {...} // optional signal handler(s)

Voice(Id:"v1") // optional Voice(s), referenced by Tracks

{

AnodeInit = "Vital" // or tuple of all anodes used.

Pan = .3 // override song value for users of this voice

}

Voice(Id:"v2") {...}

Track(Id:"melody") // required Track(s)

{

VoiceRef = "v1"

Section(Id:"Intro")

{

[a3 b3 c3 d3] // a measure of 4 notes

}

Section(Id:"Chorus")

{

}

}

Track(Id:"perc")

{

VoiceRef = "v2"

Pan = .7 // override voice or song value for this track

...

}

}

Performance

To perform a song we play all blocks "in parallel". In other words all tracks play together.

Within a block, events are triggered in order and with the timing defined by block tempo as well as by the event position in a measure plus optional event-timing values. Usually, the tempo is shared across all tracks, but as you can see in Mr Reich, you can choose a tempo for each track to obtain interesting phasing effects.

Track

A Track is where song events (notes, chords and sounds) reside. Events are represented by an arbitrary name (identifier) and so can have variable values. The value of an event can be assigned within the track scope or inherited from voice or global or systemwide scopes.

Track blocks can be authored in chunks and combined when loaded.

This is done by defining a new track with a previously defined Id.

Contents of such tracks are appended to the first track with

that Id. This way you can interleave Tracks, for example,

on a section-by-section basis making it easier to keep different

tracks mutually consistent.

Section

A song block can be divided into named chunks using Section blocks.

A Section's Id is purely informative unless a Sequencer

is active. When a song is performed with active sequencing

you must include an Id for each section and design them knowing

that they will be performed in a potentially arbitrary order. This

amounts to ensuring that variables are initialized in sections that

refer to them.

Section blocks are not independent subscopes of their track and cannot be further nested. When developing a song, Sections can be used to audition individual sections or experiment with the movement from one section to another. This is done by modifying the Sequencer statement as we now explore.

Sequencer

When present, Sequencer describes the ordering and

repetition of Sections in performance.

Sequencer is a system variable and should usually be assigned

a value near the top of your Song block and only once. The default

value for Sequencer is Nil and this means that all

tracks will be performed from top to bottom, disregarding

any Sections defined within the tracks.

When non-Nil, Sequencer must describe an ordering of song

Sections. Due to the fact that the Sequencer describes only

untimed, unarticulated, non-chordal ordering of song sections,

the sequencing notation is a subset of that used to

express note and measure timing. Specifically, measure

nesting and repeat (!) operations are supported, but

articulations and stretch operations are not.

Here's a simple example:

Sequencer = [Intro [Verse!3 Chorus]!3 Ending]
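To see what such a Sequencer value means in linear terms, here is a minimal Python sketch that flattens the nesting and ! repeats into the order in which sections would be performed. It is not part of Hz: the function name flatten_sequencer and the tokenizing rules are assumptions made for illustration.

```python
import re

def flatten_sequencer(text):
    """Expand a Sequencer expression such as "[Intro [Verse!3 Chorus]!3 Ending]"
    into a flat list of section Ids. Illustrative only; not the Hz parser."""
    tokens = re.findall(r"\[|\]|!\d+|[A-Za-z_]\w*", text)
    pos = 0

    def repeat_count():
        # Consume an optional "!n" suffix and return n (default 1).
        nonlocal pos
        if pos < len(tokens) and tokens[pos].startswith("!"):
            count = int(tokens[pos][1:])
            pos += 1
            return count
        return 1

    def parse_group():
        # Parse items up to the matching "]" and return them flattened.
        nonlocal pos
        out = []
        while tokens[pos] != "]":
            if tokens[pos] == "[":
                pos += 1
                item = parse_group()
            else:
                item = [tokens[pos]]
                pos += 1
            out.extend(item * repeat_count())
        pos += 1  # skip the closing "]"
        return out

    assert tokens and tokens[0] == "[", "expected a bracketed sequence"
    pos = 1
    inner = parse_group()
    return inner * repeat_count()

order = flatten_sequencer("[Intro [Verse!3 Chorus]!3 Ending]")
print(len(order), order[:5])
```

For the example above this yields 14 sections: Intro, then three rounds of Verse, Verse, Verse, Chorus, then Ending.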

Sequencer also supports a special value to notify the performance environment that real-time sequencing selection will be used.

Sequencer = "Live"

In that case, the question of performance-completion becomes

relevant. A Handler that invokes SetSequencer can

request performance termination by passing it a Nil sequence value.

Moving through sections referenced by Sequencer produces instantaneous jumps in the performance of each track. If a Section is not present within a given track, no jump will occur and the track will proceed in its usual linear ordering. For example, it's common for Voice tracks to have no section-specific behavior.

The sole function of Sequencer is to describe section ordering.

Each section can have its own performance settings and these can

differ between tracks. It's common to require settings that are

section-specific but song-wide. Tempo and Meter are prime

examples of this (for non-Reichian songs).

In the following example, we create a special track whose scope is Global

to achieve this. Keep in mind that tracks are evaluated in the

order encountered in the song so shared behavior should be

expressed by the "earlier" track(s).

Song(Id:"I have a sequencer!")

{

Sequencer = [Intro [Verse!3 Chorus]!3 Ending]

Track(Id:"sharedTiming", Scope:"Global")

{

// This track has no events, makes no sounds.

// Its purpose is to establish tempo changes across the song.

Section(Id:"Intro")

{

Tempo = 120

}

Section(Id:"Verse")

{

Tempo = 150

}

Section(Id:"Chorus")

{

Tempo = 120

}

Section(Id: "Ending")

{

Accel = -.1

}

}

Track(Id:"melody")

{

Section(Id:"Intro")

{

... measures here ...

}

Section(Id:"Verse")

{

... measures here ...

}

Section(Id:"Chorus")

{

... measures here ...

}

Section(Id:"Ending")

{

... measures here ...

}

}

Track(Id:"bass") {...}

Track(Id:"perc") {...}

}

Voice

The Voice block allows you to specify parameters and context

for its instrument(s). Voices are referred to using their Id.

This is done by assigning the System variable, VoiceRef

as shown in this snippet:

Voice(Id:"lead")

{

AnodeInit = "Vital"

Pan = .25

}

Track(Id:"melody")

{

VoiceRef = "lead"

Scale = "C3 major"

[0 1 2 3]

VoiceRef = "anothervoice"

[0 1 2 3]

}

This design makes it possible for a track to switch voices as its performance proceeds.

Like all Songbook blocks, Voice is a scope for variables and is responsible for converting names to concrete values. This symbol lookup is performed relative to the referencing track so that tracks can override the interpretation of an identifier as its events are performed. One use for this feature is to change the key of an event sequence between measures or sections.

Voice blocks should request the performance environment to instantiate

one or more audio nodes through a single AnodeInit request.

AnodeInit can be the (string) name that describes the audio node to the

performance environment. For example, "FluidSynth", "Vital" and

"Surge XT" are the names of CLAP plugins known to Hz. More

complex AnodeInit scenarios are possible by nesting data within <>.

| AnodeInit Examples | Description |
|---|---|
| "FluidSynth" | A single anode for this voice, default configuration. |
| <"FluidSynth", "soundfont/myfont.sf2"> | A single anode with an initial state. |
| <<"Vital">, <"Surge XT">> | Two anodes with default implied. |
| <<"Vital", "presets/bells.vital">,<"Surge XT", "presets/drums.surge">> | Two anodes with an initial state for each. |
| <"Hz", "preset.js", (name:"v2")> | The Hz runtime supports procedural (javascript) voice configuration allowing for arbitrary per-voice node graph construction. Here, Hz executes the function, InitVoice, defined in preset.js. |

To select between multiple anodes, the Anode keyword can be set to the

index into the AnodeInit tuple. The default value of 0 means that

it need only be manipulated in the multiple anode case.

Keep in mind: it's up to the performance environment to interpret

the AnodeInit requests and also to define voicing when AnodeInit isn't

provided. In that case the only information available is the voice

Id suggesting the potential value of conforming to a convention like

General MIDI when selecting voice names.

Voice blocks can modify voicing of track events by providing values for voice parameters like VelocityRange or Pan. These values can be modified between time measures or sections and may be further modified by evaluating each event's articulation. Note that if you have a shared-timing track it should precede Voices that require correct timing for their proper articulation.

Voice(Id:"piano")

{

AnodeInit = "FluidSynth"

MidiProgram = 1

loud = <.8, 1>

moderate = <.5, .6>

quiet = <.2, .4>

Section(Id:"A")

{

VelocityRange = loud

|z|!10 // 10 measures of silence

VelocityRange = quiet

}

Section(Id:"B")

{

VelocityRange = moderate

}

}

Voice(Id:"leadsynth")

{

AnodeInit = <"Vital", "presets/modularBells.vital">

o1pan = "Oscillator 1 Pan" // one of many Vital parameters

o1panLeft = (o1pan:0)

o1panMid = (o1pan:.5)

o1panRight = (o1pan:1)

}

Voice(Id:"drum")

{

AnodeInit = "FluidSynth"

MidiBank = 10

sd = 45

}

Track(Id:"t1")

{

VoiceRef = "drum"

...

}

Track(Id:"t2")

{

VoiceRef = "leadsynth"

[a3|o1panLeft]

}

When no voices are present in the song, the behavior depends upon the performance environment.

Block Structure

Now, let's delve more deeply into the structure and syntax available to song blocks.

Blocks are composed of comments, assignments and events.

Events are grouped into measure-groups, measures and submeasures

and bounded by [].

{

// Here is a block comment

a = 3 // here is an assignment

[a b [c d] [c d]] // here is a measure with submeasures

}

Assignment statements assign values to variables that may affect the performance of events that follow its assignment. Assignment statements trigger the implicit creation of a new measure-group. Some variables are system variables and by convention these are represented by uppercase names.

Meter = <4, 4>

Tempo = 120

Scale = "C4 major"

[0 1 2 3] // measure group 1

[0 1 2 3]

Scale = "C4 minor"

[0 1 2 3] // measure group 2

[0 1 2 3]

All blocks except Sections represent a scope for assignment. This means that separate tracks can manage their own performance. The Song block represents the outer (global) scope for variables and can be used to express shared behavior.

Measure

A block section is divided into Measures. In common music notation, measures ensure that musicians are on the same page. In songbook we can synchronize track events and, for example, scrub to a particular time in the song only if the tempo is shared across tracks. Of course we can employ Section to achieve this at a more granular level.

A measure's temporal duration is defined by the current (scoped)

value of Tempo reduced to seconds per measure (SPM).

To perform a block we simply iterate over its measures. Due to the fact that measures can be repeated or stretched, we can't assume that the i-th measure performed is the i-th measure in a section. That said, we can pre-flatten the repeats and now refer to measures with an index.

Events

Events within measures represent the flow of time from left-to-right,

top-to-bottom. Unless stretched, outer-measures share the same duration

as defined by the current Tempo. Events are described by a number,

tuple or variable name. The interpretation of event values depends

on the current voice and other musical state. Musical voices are

either tonal or percussive and it's up to the instrument associated

with the block's current voice to interpret the event once converted

to a numeric representation.

For example, numeric events represent notes relative to the current

Scale or as absolute MIDI note numbers. Variable events represent

sounds symbolically and can be defined to suit your own needs. When

the songbook performer encounters events with undefined values, it

attempts to interpret them as musical notes. Specifically, events

of the form [a-g][#b]Octave are interpreted in the obvious way.

Similarly General MIDI provides standard

names for a range of percussive sounds and program names.

| Event Identifiers | Notes |
|---|---|
| [ab4 bb4 c4 db4] | clear meaning for tonal instruments |
| [a b c d] | no clear meaning, we need an octave |
| [sd bd z hh] | clear meaning, for percussion instruments |

As we've already stated, you can assign your own meaning to any identifier. Just make sure to assign it a value in the appropriate scope.

Notes

By default, musical notes are expressed in a form like this: c#7. A rest is expressed: z. Notice that the MIDI octave is included in this notation and follows Scientific Pitch Notation (SPN) wherein octaves range from -1 to 9, middle C is expressed c4 and a4 is 440 Hz.

Broadly speaking, events ultimately evaluate to absolute SPN note numbers.

Such numbers are interpreted by a synthesizer and can produce tonal

or percussive (i.e. not note-like) sounds. Thus, the following

measure makes perfect sense: [60 62 64 66].

When notes are authored directly as numbers and not their symbols,

it is often convenient to think of the numbers in relative terms.

For example, it may be convenient to express an idea relative

to a musical scale. Now we can describe a run of notes in a

scale like so: [0 1 2 3]. If we change Scale, the same

measure will produce different tones. Note that between measures

you can change the value of Scale allowing you to change number

interpretations through a song.

We employ the current value of Scale as a guide on how to convert authored numbers into SPN numbers. If you author SPN notes directly you should set Scale to Nil. This is the default value of Scale.
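The conversion from a note name to its number can be sketched in a few lines of Python. This is an illustration of the convention described above (c4 is 60, octaves run from -1 to 9), not the Hz implementation; the function name note_number is hypothetical.

```python
import re

# Semitone offsets of the natural notes within an octave.
_NATURAL = {"c": 0, "d": 2, "e": 4, "f": 5, "g": 7, "a": 9, "b": 11}

def note_number(name):
    """Convert a name like "c#7" or "bb4" to a note number, with c4 = 60."""
    match = re.fullmatch(r"([a-g])([#b]?)(-?\d+)", name)
    if match is None:
        raise ValueError(f"not a note name: {name!r}")
    letter, accidental, octave = match.groups()
    shift = {"": 0, "#": 1, "b": -1}[accidental]
    return 12 * (int(octave) + 1) + _NATURAL[letter] + shift

print([note_number(n) for n in ("c4", "a4", "c#7", "c3")])
```

Under this convention a4 (440 Hz) lands on 69 and c3 on 48.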

Musical Scales

A scale is requested by assigning a string value to the Scale identifier and there are many to choose from.

| Expression | Description |
|---|---|
| Scale = "C3 minor" | Sets key, origin and quality |
| Scale = "C minor" | Sets key and quality, no origin |
| Scale = "minor" | Sets quality, no origin, no key |
| Scale = Nil | Sets the null scale (numbers are unmapped) |

Note that there are 2 optional characteristics of a Scale, its origin and its key. The required characteristic of a scale, its quality, determines the subset of notes within an octave that are members of the scale.

Let's look at how numeric remapping applies in a range of circumstances.

| Scale | Input | Remapped | Discussion |
|---|---|---|---|
| Nil | [c4 d4 e4] | [60 62 64] | No remapping |
| "D minor" | [c4 d4 e4] | [60 62 64] | No remapping, expressed concretely |
| Nil | [0 1 2] | [0 1 2] | No remapping, no scale |
| "minor" | [0 1 2] | [0 2 3] | Chromatic values from minor scale, 0 origin |
| "D minor" | [0 1 2] | [2 4 5] | Chromatic values from D minor scale, 0 origin |
| "D3 minor" | [0 1 2] | [50 52 53] | Chromatic values from D minor scale, 50 (D3) origin |

It should be noted that without an origin, small numbers will be remapped

to small numbers and these are likely to be in the subsonic range of

SPN notes. To bring such notes into the audible range you can

offset them by setting Transpose as seen here:

Track()

{

Scale = "minor"

Transpose = 30

[0 2 4 6]!10

}
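The remapping of scale indices can be sketched in Python as follows. This is a minimal illustration under stated assumptions: only two scale qualities are listed, indices beyond the scale length are assumed to wrap into the next octave, and the names QUALITIES and remap are made up for this example.

```python
# Semitone steps of two common scale qualities (assumed; Hz offers many more).
QUALITIES = {
    "major": [0, 2, 4, 5, 7, 9, 11],
    "minor": [0, 2, 3, 5, 7, 8, 10],
}

def remap(indices, quality, base=0, transpose=0):
    """Map scale indices to chromatic numbers.

    base is 0 for a bare quality, the pitch class for a keyed scale
    (D = 2) or the note number of the origin (C3 = 48).
    """
    steps = QUALITIES[quality]
    out = []
    for i in indices:
        # Indices past the end of the scale continue in the next octave.
        octave, degree = divmod(i, len(steps))
        out.append(base + 12 * octave + steps[degree] + transpose)
    return out

print(remap([0, 1, 2], "minor"))                   # bare quality
print(remap([0, 1, 2], "minor", base=2))           # keyed: D minor
print(remap([0, 2, 4, 6], "minor", transpose=30))  # the Transpose example
```

The first two calls reproduce the "minor" and "D minor" rows of the remapping table; the third shows how Transpose = 30 lifts the otherwise very low numbers of an origin-less scale.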

Sounds vs Notes

Events are often identified indirectly via an identifier (variable name). Variables are scoped to the track or voice and this allows each voice to define the meaning of, for example, "c3" or "sd". In the case of tonal voices, c3 will usually be converted to an SPN value, 48, though alternate tuning systems are possible. In the case of percussive voices, the variable name can be converted to some arbitrary combination of bank and program/note. General MIDI defines a mapping between sounds and note numbers and these names are provided as defaults.

[hh sd bd hh]!8

Note that some sounds may have multiple variants characterized by their index.

In order to support randomization, it is advisable that most variables evaluate to numbers. This allows each instrument to interpret a number or convert sd0 to a combination of MIDI channel, bank and note number. A generic GM percussion implementation would select channel number 10 and somehow map sd0-5 to an assortment of note numbers which in turn are internally mapped to a sample file and replay rate.

Chords

Chords are a class of events consisting of multiple simultaneous events. Chords can be expressed in a few ways:

- |-separated: [c3|e3|g3] or [0|2|4]
- a tuple: [<c3,e3,g3>] or [<0,2,4>]
- standard identifier: [Maj7]
- custom identifier: [myFavoriteChord], where you assign any of these forms to myFavoriteChord like myFavoriteChord = <c3,e3,g3>.

Chords can be expressed with pre-defined identifiers in the common form Cmaj7_B (with _ replacing '/') from standard notation. Keep in mind that identifiers can be optionally keyed and origined like so: Cmaj, C3maj, maj. Keyed chords must always use upper case for the key. Chords can also be expressed in Roman-numeral notation like [I z IV V] but this notation requires that a key be provided via the Scale keyword.

As with note events we must interpret chords authored with numeric values. As with Scales, builtin Chords have three components, quality, key and origin. This can be a bit confusing.

Consider:

| Scale | Chord | Remapped | In Scale | Discussion |
|---|---|---|---|---|
| Nil | <c4,d4,e4> | <60,62,64> | n/a | No remapping |
| D3 minor | <c4,d4,e4> | <60,62,64> | N | No remapping, resulting chord isn't in scale. |
| D3 minor | <60,61,62> | <error> | Y | Large numbers remapped beyond audible range. |
| D3 minor | <0,1,2> | <50,52,53> | Y | Numbers remapped via scale origin and indices. |
| minor | <0,1,2> | <0,2,3> | Y | Numbers remapped via scale indices, no origin. |
| D3 minor | C4maj | <60,64,67> | N | Chord fully qualified, scale irrelevant. |
| D3 minor | IVm7 | <55,58,62,64> | N | Scale provides root and origin, notes outside scale. |
| D3 minor | Cmaj | <48,52,55> | N | Chord has no origin, use C3 (same octave as D3). |
| D3 minor | maj | <50,52,54> | N | Scale, use same octave as D3, notes outside scale. |
| Nil | Cmaj | <0,4,7> | n/a | No scale, no origin |
| Nil | IVm7 | <error> | n/a | Roman numeral chords always require a keyed scale. |

It's important to recognize that named chords always produce values that are interpreted as chromatic indices rather than scale indices.

Chord names are a little tricky but very useful. You can find the dictionary of aliases below.

Ranges

Ranges are sequences of events and are expressed via a special tuple

of the form <_a,b,s> (note the <_). Ranges are a compact form to

request linear note sequences and are commonly used to select a run of

notes from a scale.

Track()

{

// range combined with scale for arpeggios

Scale = "C2 major"

[[<_0,19,2>] [<_19,0,2>]]!2

}
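As a rough model of what a range tuple expands to, here is a small Python sketch. It assumes integer arguments, a positive step s that defaults to 1, a descending run when a is greater than b, and an inclusive end point whenever the step lands on it. The name expand_range is hypothetical and Hz may differ in these details.

```python
def expand_range(a, b, step=1):
    """Expand <_a,b,s> into a list running from a toward b in steps of s.

    The end point is included when the step lands on it (an assumption).
    """
    if step <= 0:
        raise ValueError("step must be positive")
    direction = 1 if b >= a else -1
    count = abs(b - a) // step + 1
    return [a + direction * step * k for k in range(count)]

print(expand_range(0, 19, 2))   # ascending run, as in <_0,19,2>
print(expand_range(19, 0, 2))   # descending run, as in <_19,0,2>
```

Each value is then a scale index, so with Scale = "C2 major" the two ranges above climb and descend through every other scale degree.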

Ordering and Timing Events

Meter, Tempo, Accel

Typically, Tracks share the notion of Meter, Tempo and Accel to facilitate their synchronization. These values can change instantly across a measure or between sections. The Tempo can also be changed gradually through the specification of non-zero Accel. Finally, to achieve phasing effects, each Track can override the global Tempo.

Meter, SPM, Tempo, and Accel are reserved identifier names that can

be overridden by a track or controlled by the Sequencer.

The Meter of a track characterizes how to count beats. Following

Modern Staff Notation

the meter is represented as tuple fraction, like <4,4>, <3,8>.

The second number defines the size of a beat and is typically

one of 1, 2, 3, 4, 8, 16. The first defines the number of beats

in a measure.

The Tempo of a track characterizes the rate at which beats are played.

In Modern Staff Notation, Tempo is described by an Italian word or

by a count in beats/minute. Since there is no single uniform notation

for a beat in songbook notation, we interpret the combination of Meter,

Tempo, and Accel to produce a value of SPM, or seconds per measure.

You can bypass this calculation by providing an explicit value for SPM.

The SPM time-interval is divided amongst events (and measures) according

to the event count and timing described below. In our physics jargon

below, SPM is the velocity at the start of a measure. When

Accel is non-zero, SPM is effectively changing through the performance

of each event within the measure. When Accel is greater than 0 the

performance speed increases and when it's less than 0 it decreases.

The units of Accel are discussed below but useful values tend to be

in the magnitude of 0.01 - 0.2.
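The exact formula Hz uses for SPM isn't given on this page. As a rough guide, the following Python sketch assumes that Tempo counts the meter's beats per minute and that Accel is 0; both are assumptions, as is the function name seconds_per_measure.

```python
def seconds_per_measure(tempo, meter=(4, 4)):
    """Estimate SPM, assuming tempo is in beats per minute and Accel is 0.

    Only the first meter number (beats per measure) matters here because
    the beat unit is assumed to be the thing that tempo counts.
    """
    beats_per_measure, _beat_unit = meter
    return beats_per_measure * 60.0 / tempo

print(seconds_per_measure(120))          # <4,4> at 120
print(seconds_per_measure(120, (3, 8)))  # <3,8> at 120

# Phasing as in Mr Reich: the second track runs at 99% of the tempo.
drift = seconds_per_measure(140 * 0.99) - seconds_per_measure(140)
print(round(drift * 1000, 1), "ms per measure")
```

Under these assumptions the two Mr Reich tracks drift apart by roughly 17 ms with every measure, which is what produces the phasing effect.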

Event Timing: Implicit and Explicit

As mentioned earlier, songbook events reside within a measure. This determines the event onset and duration. Measures contain a space-separated list of events described as a number, an identifier, a tuple, or an inner measure. Identifier values can be defined or overridden within any block outside the scope of a measure. Identifier values are obtained by inspecting the track, then the voice, then the song.
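The lookup order just described (track, then voice, then song) behaves like a chain of dictionaries. Here is a minimal Python sketch of the idea. The dictionaries and their contents are made up for illustration and this is not how Hz is implemented.

```python
from collections import ChainMap

# Hypothetical scopes, innermost last in this listing.
song = {"Pan": 0.5, "Tempo": 120}
voice = {"Pan": 0.3, "sd": 45}
track = {"Pan": 0.7}

# ChainMap searches its maps left to right: track, then voice, then song.
scope = ChainMap(track, voice, song)

print(scope["Pan"])    # found in the track
print(scope["sd"])     # falls through to the voice
print(scope["Tempo"])  # falls through to the song
```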

Event timing is characterized by onset, time-spacing and duration. For this discussion we conflate time-spacing with duration. In order to achieve effects like staccato or legato we require more nuanced articulation of duration as discussed later.

Events inherit their onset by dividing the containing measure by the number and duration of events found therein. So a measure is divided into four equal-spaced notes like so:

[a3 a3 a3 a3] // measure with 4 events

We can define fractional time-intervals implicitly by nesting measures within measures as seen here:

[[a3 a3] a3 a3 a3] // measure with submeasure consuming 1/4 of its parent.

We can interpret this in common time as two eighth notes followed by 3 quarter notes. You can take this even farther to represent grace notes, trills etc.
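The implicit division of a measure can be sketched in Python. Here a measure is modelled as a list, a submeasure as a nested list and a stretched event as an (item, stretch) pair. This representation and the name schedule are assumptions made for the example, not Hz internals.

```python
from fractions import Fraction

def schedule(measure, start=Fraction(0), length=Fraction(1)):
    """Return (event, onset, duration) triples as fractions of the measure.

    measure is a list whose items are event names, nested lists
    (submeasures) or (item, stretch) pairs standing in for item@stretch.
    """
    items = [m if isinstance(m, tuple) else (m, 1) for m in measure]
    total = sum(Fraction(w) for _, w in items)
    out = []
    for item, weight in items:
        span = length * Fraction(weight) / total
        if isinstance(item, list):
            # A submeasure divides its own span among its events.
            out.extend(schedule(item, start, span))
        else:
            out.append((item, start, span))
        start += span
    return out

for event in schedule([["a3", "a3"], "a3", "a3", "a3"]):
    print(event)
```

For [[a3 a3] a3 a3 a3] the first two events each last 1/8 of the measure and the remaining three last 1/4 each, matching the eighth-note and quarter-note reading above.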

In addition, timing can be explicitly modified using this syntax:

| Explicit Timing Modifiers | Interpretation |
|---|---|
| @n | stretch/squeeze event by n, n is number |
| !n | repeat event n times, n is integer |
| *n | squeeze and repeat event n, n is number or tuple |

Any event can include both one stretch and one of repeat or squeeze modifiers, enabling the following: [a3 b3 c3 d3]@3!4. This is interpreted as the measure of 4 notes stretched to a length 3 times its implicit duration and then repeated 4 times. Since we apply the optional stretch prior to the repeats it must also be expressed in this order, making this a syntax error: [a3 b3 c3 d3]!4@3.

Time modifier numbers are represented as unsigned fractions: 4/3,

integers:2 or decimal numbers: 2.2. Fractions may be preferred over

decimal values since they can represent common durations like 1/3 exactly

and suffer no loss of precision when combined via multiplication and addition.

Note that generalized division is not implied by our use of fractions. Also note that * isn't a numeric multiplication operator.

When a tuple follows the * modifier, it is interpreted as a request for Euclidean timing with two or three numbers representing hitsPerMeasure, slotsPerMeasure, slotoffset (optional, default is 0).
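A Euclidean rhythm spreads a number of hits as evenly as possible over a number of slots. The Python sketch below uses a simple Bresenham-style rule to do this. The algorithm Hz actually uses and the direction in which the slot offset rotates the pattern aren't stated here, so treat both, along with the name euclid, as assumptions.

```python
def euclid(hits, slots, offset=0):
    """Return a list of `slots` booleans with `hits` spread evenly.

    Uses a Bresenham-style rule; the pattern is rotated left by offset.
    """
    if slots <= 0 or not 0 <= hits <= slots:
        raise ValueError("need slots > 0 and 0 <= hits <= slots")
    pattern = [(i * hits) % slots < hits for i in range(slots)]
    shift = offset % slots
    return pattern[shift:] + pattern[:shift]

def show(pattern):
    """Render hits as x and empty slots as dots."""
    return "".join("x" if hit else "." for hit in pattern)

print(show(euclid(3, 8)))     # x..x..x.
print(show(euclid(3, 8, 1)))  # ..x..x.x
```

With this rule, a3*<3,8> would place a3 on slots 0, 3 and 6 of an 8-slot submeasure.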

Here are some examples of events with explicit and implicit timing.

| Example | Interpretation |
|---|---|
| [a b c d] | 4 equal-duration events. |
| [0 1 2 3] | 4 equal-duration events. |
| [a b@2 c d] | 5 time units. b held twice as long. |
| [[a b] c d] | 3 time units, a and b each occupy 1/6 of total. |
| [a!2 c d] | 4 time units, a is performed twice. |
| [<c3,e3,g3>@3 d] | 4 time units, first three are a held C-major triad chord. |
| [a3*4 d] | 2 time units, first is submeasure with 4 units. |
| [a3*<3,8> d] | 2 time units, first is submeasure with 8 Euclidean slots. |
| [<_0,10>] | range tuple produces 11 equal-duration event-numbers from 0 to 10. |
| [[a@3 b] c c] | broken rhythm |

This notation can also be used to articulate chord components.

Articulation

The term articulation is used to describe variations on the performance of an event. A note's loudness, its relative duration and other instrument-specific modifications like vibrato all fall under this umbrella.

Global Articulation

You control the default settings for some articulators simply by assigning to their System variables. As with all variables, these values are scoped to the block in which the assignment occurs. Thus, these settings can differ according to Track or Section. They can even be overridden on an event-by-event basis as described below.

| Name | Meaning | Value Range | Default |
|---|---|---|---|
| Dur * | Relative duration (for staccato/legato) | (.01, 4+) | .95 |
| Velocity * | Controls volume and/or instrument effect | (0, 1) | .8 |
| VelocityRange | Maps velocity (0-1) to min-max | tuple | <.2, .8> |
| Pan * | Relative position in stereo field (depends on instrument) | (0 (left), 1 (right)) | Nil |

*: accepts tuple for randomization
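One plausible reading of the velocity range mapping is a linear interpolation between the two ends of the range. The Python sketch below shows that reading; the linear curve and the name apply_velocity_range are assumptions, not a statement of what Hz does.

```python
def apply_velocity_range(velocity, velocity_range=(0.2, 0.8)):
    """Linearly map a 0-1 velocity into the (min, max) range."""
    low, high = velocity_range
    return low + velocity * (high - low)

for v in (0.0, 0.5, 1.0):
    print(v, "->", round(apply_velocity_range(v), 3))
```

With the default range, a velocity of 0 maps to .2 and a velocity of 1 maps to .8, so even the quietest events stay audible.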

Event Articulation

Individual events can be articulated by optional comma-separated fields enclosed by parentheses that follow optional event-timing.

[a3!4(v:.5,d:1.2)] // set note velocity and duration

Multiple articulations can be provided and these may represent modification of

global articulations within the voice. Articulations are processed

left-to-right so the last-most requests may shadow prior ones. Each

articulation takes the form key:value where the value can either

be a value or an identifier (variable).

Universal Articulators

These articulators are well defined and supported by all voices.

| Key | Meaning | Value Range |
|---|---|---|
| v | velocity | (0+, 1) |
| d | dur | (0+, ?), <1: staccato, >1: legato |

Optional Articulators

These articulators are not universally supported. Their value is in the generic nature of the expression, i.e. they aren't tied to specific parameters of a particular voice/instrument. If you are willing to commit to a voice, i.e. your favorite synth, you might consider using custom voice articulators. To ensure that your songs can be performed in diverse voice configurations, these optional articulators are a good bet. They have support / meaning in both CLAP and pure-MIDI voice settings.

Some articulators can capture changing values over the lifetime of a single note. These articulators accept tuples or numbers. For example, a numeric tuning value may be useful for custom tuning schemes while a varying (tuple-range) pitch bend would be required to gradually change the pitch over the note. Keep in mind that some of these articulators have global, persistent effect, especially in MIDI instruments. These may need to be reset after use.

| key | meaning | range | default | notes |
|---|---|---|---|---|
| vo * | volume | 0 - 4 | 1 | log scale |
| pa * | pan | 0 - 1 | .5 | clap is 0-1 |
| tu * | tuning/bend | -128,128 | 0 | clap: semitones, midi: 0-127 -> -2-2 |
| vi * | vibrato (depth) | 0 - 1 | 0 | combines with vr and vd |
| vd | vibrato delay | 0 - n | 0 | seconds |
| vr | vibrato rate | 0 - 1 | | |
| ex * | expression | 0 - 1 | | |
| br * | brightness | 0 - 1 | | clap: float semitones, midi: 0-127 -> -2-2 |
| pr * | pressure | 0 - 1 | | clap: float semitones, midi: 0-127 -> -2-2 |
| cc{num} * | MIDI cc | 0 - 1 | | MIDI control message |
| mw * | MIDI modwheel | 0 - 1 | | MIDI modwheel |
| pw * | MIDI pitchwheel | -1 - 1 | | MIDI pitchwheel |

*: accepts tuple

| Examples | |
|---|---|
| [a3!4(v:.5,d:1.2)] | set note velocity and duration |
| [a3!4(tu:<_0,-1>)] | pitch bend each note one semitone down |

Voice Articulators

As described in the voices overview, arbitrary instrument parameters can be defined and then "performed" as events.

| key | meaning | value range | notes |
|---|---|---|---|
| V_{param} | custom voice parameter | number or number tuple | set a voice parameter value (anode-specific) |
| V | voice | voiceref string | used to change voices (uncommon) |

The first example below assumes that both foo and bar are mapped to

parameter names in the current voice (defined by VoiceRef).

In this example you can provide values for these parameters on

any event. If you wish to perform voice parameters defined within

the voice directly, just treat them like any event as shown in the

second example.

| Examples | |
|---|---|
| [z(V_foo:3,V_bar:<_1,2>)] | set voice parameter values |
| [<c3,oscLeft>!3] | perform voice parameter+value (oscLeft must be defined as plist) |

Handler

The Handler block allows you to integrate live performance into your songs. You can use it to practice jamming or enliven a performance of your songs.

A Handler listens to external live events within a SignalFamily

and triggers a response in the form of events, assignments or

function-calls in a song. Each time an event occurs, the entire

body of the handler is performed. When operating in the context

of a Sequencer you can use Sections

to modify the behavior of your handler. You can also have

multiple Handlers servicing the same signal family.

One use for Handlers is to request a new Sequencer

state. This allows you to select from different performances

of your song interactively. You may wish to set the initial Sequencer

to "Live" as described above. Here's an example that listens for

the keyboard keys "1", "2" or "3" and invokes SetSequencer

accordingly.

Handler(Id:"seqSelect", SignalFamily:"Hid")

{

HidFilter("KeyDown", "1", "2", "3")

mysequences = (1:[a b c], 2:[b]!3, 3:Nil)

SetSequencer(HidKey, mysequences)

}

Handlers can invoke special functions to alter the performance state. These functions are divided into two categories: General and SignalFamily-specific. Here are the General functions:

| Functions for any handler | Description |
|---|---|
| SetVar(var, block, key, plist) | var is the name of a variable, block specifies a block name pattern, key selects a value from plist, value matching key is assigned to the named variable |
| SetSequencer(key, plist) | key selects a sequence from plist. Value must be a valid sequencer statement |

Here's an example to interactively mute or unmute tracks.

Handler(Id:"muter", SignalFamily:"Hid")

{

HidFilter("KeyDown", "1", "2")

options = (1:0, 2:1)

SetVar("Mute", "Melody*", HidKey, options)

}

Currently Midi and Hid signal families are supported.

Future versions may support OSC (Open Sound Control) or

Ableton Link.

Midi Handler

These special functions are available within MIDI handlers.

| Functions for Midi handlers | Description |
|---|---|
| MidiPerform() | Delivers all MIDI events to associated voice |
| MidiFilter(midiCmd, ...) | Events that don't match filter return from handler. |

These special variables are available within MIDI handlers.

| Variable | Description |
|---|---|
| MidiKey | number (0-127) that represents the most-recent key. |
| MidiKeyName | name for MidiKey |
| MidiChannel | number (0-15) channel for last event |
| MidiCmd | name of last midi cmd |
| MidiData1 | number (0-127) MIDI data pkt 1 |
| MidiData2 | number (0-127) MIDI data pkt 2 |

These are the potential values for MidiCmd and MidiFilter():

| Value | Midi Pkt 0 |
|---|---|
| KeyUp | 0x80 |
| KeyDown | 0x90 |
| KeyPressure | 0xa0 |
| CC | 0xb0 |
| ProgramChange | 0xc0 |
| ChanPressure | 0xd0 |
| PitchWheel | 0xe0 |
| SysEx | 0xf0 |
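For readers unfamiliar with MIDI framing, the table above maps the high nibble of the first MIDI byte to a command name, while the low nibble carries the channel. The following sketch shows that decoding in JavaScript. The `decodeStatus` helper and `MIDI_COMMANDS` map are illustrative names, not part of Hz, and the command names are simply those listed in the table.

```javascript
// Command names from the table, keyed by the high nibble of the status byte.
const MIDI_COMMANDS = {
  0x80: "KeyUp",
  0x90: "KeyDown",
  0xa0: "KeyPressure",
  0xb0: "CC",
  0xc0: "ProgramChange",
  0xd0: "ChanPressure",
  0xe0: "PitchWheel",
  0xf0: "SysEx",
};

// Split a MIDI status byte into a command name and a zero-based channel.
function decodeStatus(statusByte) {
  const cmd = MIDI_COMMANDS[statusByte & 0xf0];
  if (cmd === undefined) return null; // high bit clear: a data byte, not a status byte
  // For 0xf0-0xff the low nibble selects a system message, not a channel.
  const channel = cmd === "SysEx" ? null : statusByte & 0x0f;
  return { cmd, channel };
}

const noteOn = decodeStatus(0x93); // KeyDown on zero-based channel 3
console.log(noteOn);
```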

Here's an example with two Midi event handlers.

Handler(Id:"midiEx", SignalFamily:"Midi", MidiDevice:"default")

{

VoiceRef = "MyMidiVoice"

// multisection

Section(Id:"a")

{

Transpose = 3

// MidiPerform function interprets Midi stream and delivers

// events via VoiceRef and its current Anode

MidiPerform()

}

Section(Id:"b")

{

// We can trigger events on each midi event.

// In this mode, we typically wish to filter out some

// of the MIDI traffic.

MidiFilter("KeyDown")

// if we make it here, a NoteOn occurred and MidiKey and MidiVelocity

// are valid. Each note triggered produces an arpeggio.

Transpose = MidiKey

a = [0 2 4 6]!4

}

}

Hid Handler

Hid stands for "Human Interface Device" and most computers have at least two of these: a keyboard and a mouse. A performance environment may support general Hid devices (like Arduinos, joysticks, etc.) or support no Hid devices at all. Hz version 1 supports keyboard and mouse events and generates these events only when the mouse/keyboard focus targets the sandbox window.

These special functions are available within HID handlers.

| Functions for Hid handlers | Description |
|---|---|
| HidFilter(hidEvent, ...) | Events that don't match hidEvent return from the handler. |

When HidFilter encounters a tuple in the filter parameters, the tuple is interpreted as a range for mouse coordinate normalization.

These special variables are available within Hid handlers.

| Variable | Description |
|---|---|
| HidEvent | one of KeyDown, KeyUp, MouseDown, MouseUp, MouseMove |
| HidKey | when HidEvent indicates Key, holds the key name. |
| HidMouseX | when HidEvent indicates Mouse, holds normalized x coordinate. |
| HidMouseY | when HidEvent indicates Mouse, holds normalized y coordinate. |
| HidMouseB1 | when HidEvent indicates Mouse, holds button1 state. |

Here's a snippet that changes a custom parameter, Qmix, of myvoice.

Handler(SignalFamily:"Hid")

{

VoiceRef = "myvoice"

HidFilter("MouseMove")

vparam = (q:HidMouseX)

[vparam] // Change a custom voice parameter for every mouse position

}

Keywords, Functions, Types

System Variables

This table shows the built-in System Variables and their presumed value-types.

| System Variable | Type | Default | Description |
|---|---|---|---|
| Meter | 2-tuple | <4, 4> | |
| Tempo | number | 120 | beats per minute (BPM) |
| SPM | number | none | seconds per measure |
| Accel | number | 0 | tempo acceleration |
| Sequencer | measure | "Live" | sets the song sequencer. |
| SequenceIndex | integer | 1 | current index of sequencer. |
| VoiceRef | string | Nil | name of current voice for the block |
| AnodeInit | tuple | Nil | defines the Voice's Anodes and their initial states |
| Anode | integer | 0 | index into Voice's AnodeInit |
| Scale | string | Nil | name of tonal scale |
| Transpose | number | 0 | semitones to transpose notes. |
| Velocity | number, tuple | .8 | the default velocity value unless overridden by note-articulation. |
| VelocityRange | tuple | <.2, 1> | converts Velocity values between 0 and 1 into this range. |
| Dur | number | .95 | current duration articulation, multiplier for implied dur. |
| Pan | number | Nil | current pan articulation between 0 and 1 (.5 is center) |
| MidiBank | number | 1 | for MIDI instruments |
| MidiProgram | number | 1 | for MIDI instruments |
| MidiChannel | number | 1 | for MIDI instruments |
| MidiKey | number | Nil | available to Midi Handlers |
| MidiVelocity | number | Nil | available to Midi Handlers |
| MidiCmd | string | Nil | available to Midi Handlers (KeyDown, KeyUp, CC, …) |
| MidiData1,2 | number | Nil | available to Midi Handlers (associated with MidiCmd) |
| HidEvent | string | Nil | available to Hid Handlers (KeyDown, KeyUp, MouseDown, MouseUp, MouseMove) |
| HidKey | string | Nil | available to Hid Handlers ("a", "A", …) |
| HidMouseX | number | Nil | available to Hid Handlers (for Mouse events) |
| HidMouseY | number | Nil | available to Hid Handlers (for Mouse events) |
| HidMouseB1 | number | Nil | available to Hid Handlers (for Mouse events) |
| DisplayColor | string | Nil | display color control. |
| DisplayOffset | int | 0 | display row offset (for entire track) |
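The VelocityRange entry says that Velocity values between 0 and 1 are converted into the given range. A minimal sketch of one plausible reading follows, assuming a plain linear mapping (the table does not state the curve). The `applyVelocityRange` helper is a hypothetical name used only for illustration.

```javascript
// Assumed behavior: linearly map a 0..1 Velocity into VelocityRange <lo, hi>.
// This is an illustration, not Hz's implementation.
function applyVelocityRange(velocity, range = [0.2, 1]) {
  const [lo, hi] = range;
  const v = Math.min(1, Math.max(0, velocity)); // clamp to 0..1
  return lo + v * (hi - lo);
}

// With the defaults from the table: Velocity .8 and VelocityRange <.2, 1>.
const vDefault = applyVelocityRange(0.8); // 0.2 + 0.8 * 0.8
console.log(vDefault.toFixed(2));
```

Under this reading a Velocity of 0 still sounds at the low end of the range rather than falling silent.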

Functions

| System Function | Description |
|---|---|
| Log(value, ...) | logs a diagnostic message to the log panel |

| Handler Function | Description |
|---|---|
| MidiPerform() | directs live midi traffic to the Anode associated with VoiceRef |
| MidiFilter("MidiCmd", ...) | filters live midi traffic; an event that doesn't match the filter causes the handler to skip it. |
| HidFilter("HidEvent", ...) | filters live HID traffic; an event that doesn't match the filter causes the handler to skip it. |
| SetSequencer(key, plist) | searches plist for a sequence value associated with key |
| SetVar(var, block, key, plist) | sets the value of a variable in matching blocks: block specifies a block name pattern (for example a track name pattern), var is the name of the variable to set, key selects a value from plist, and the value matching key is assigned to the named variable |

Reserved Tokens

Tokens are the keys in a parameter list. Here's a table of Reserved tokens.

| System Tokens | Type | Default | Description |
|---|---|---|---|
| Id | string or integer | "0" | Id field for blocks |
| Mute | number | 0 | Track mute |
| Verbose | number | 0 | Block verbosity |
| Scope | string | "Private" | Block scope (Global, Private) |
| SignalFamily | string | Nil | (eg "Midi") Handler constructor parameter |

Value Types

Here's a table showing examples of values. Typically these are placed on the right-hand side of assignment statements.

| Value | Description | Notes |
|---|---|---|
| c4 | identifier | |
| 3 | integer number | |
| 3.5 | decimal number | |
| 4/3 | fractional number | |
| "c major" | string value | |
| <1,2,3> | tuple | Unqualified, list of Values |
| <?1,5> | choice tuple | Random number between 1 and 5 |
| <_1,5,1> | range tuple | 3rd value optional |
| [a b c] | measure event | Values within are events. |
| Nil | the empty value | |
| (key:value, key:value, ...) | parameter list | key:value |
| (val1, val2, ...) | parameter list | value list |

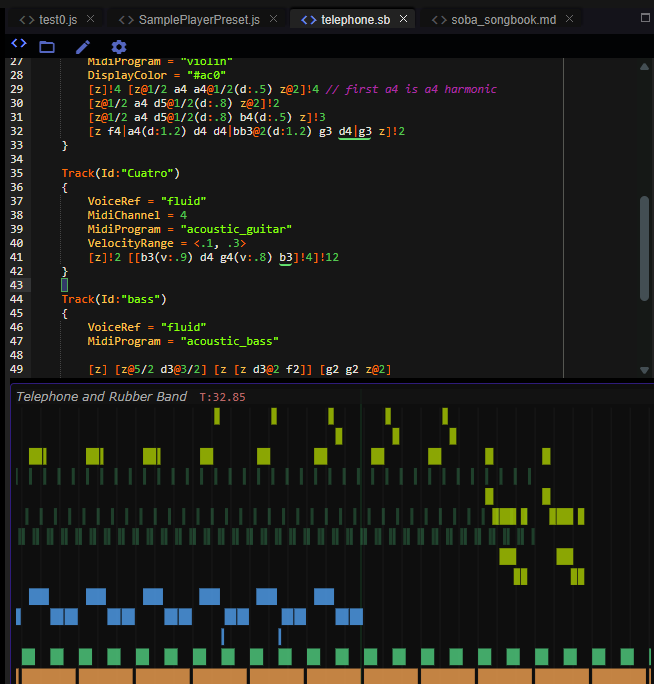

Developing Songbooks

To develop Songbooks you simply need to open your favorite text editor and start typing. Many folks appreciate color hints in a text editing experience and Songbook highlighters are available for ace editor and highlight.js. Additional feedback can be provided in the form of note visualization and even interactive playback. Hz offers both of these capabilities as shown below and described elsewhere.

Performing Songbook files

Here's a code snippet for use in Hz's javascript sandbox. Note that this code is independent of the songbook contents so this driver can be used for any songbook.

// 1. load the Songbook file...

const thisDir = path.dirname(this.GetFilePath());

const testFile = path.join(thisDir, "books/test.hz");

let str = await FetchWSFile(testFile, false/*not binary*/);

// 2. instantiate a Songbook instance

let songbook = new Songbook(str);

// 3. perform the song via the Songbook method.

// yield control to the system.

for await (const v of songbook.Perform(this, thisDir/*presetdir*/, 0 /*songid*/))

yield;

Here's a more elaborate snippet that exposes some of the inner workings of songbook.Perform.

// 1. load the Songbook file...

const thisDir = path.dirname(this.GetFilePath());

const testFile = path.join(thisDir, "books/test.hz");

let str = await FetchWSFile(testFile, false/*not binary*/);

// 2. instantiate a Songbook instance

let songbook = new Songbook(str);

// 3. select a song from the songbook

let song = songbook.FindSong(0);

// 4. ensure audio engine is running

let scene = await Ascene.BeginFiber(this);

// 5. establish correct voices by parsing anodeInitList and

// creating an anodeMap filled with instantiated anodes.

// Here, we'll just create a default voice ignoring anodeInitList.

let anodeMap = {};

let anodeInitList = song.GetAnodeInits();

let anode = scene.NewAnode("FluidSynth");

if(anode)

{

anodeMap.default = [anode];

scene.Chain(anode, scene.GetDAC());

}

// 6. Perform the song (sending notes to anodes).

// Song is performed in chunks between which we

// yield control to the system.

for await (const v of song.Perform(scene, anodeMap))

{

yield;

}

Appendices

The Physics of Musical Motion

In the case where tempo is constant we can pre-compute all measure locations (in time), where MPS is measures per second and SPM (seconds per measure) is its inverse.

measureStart = measureNum * SPM

Slightly more complex is the case where tempo is constant per measure.

measureStart = lastMeasureEnd + SPM

Note that this requires "integration" since the effects of tempo changes depend upon prior tempo changes. In other words the value of lastMeasureEnd isn't the result of a simple (non-iterative) formula.
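The two cases above can be sketched as a running sum in JavaScript. The `measureStarts` helper is a hypothetical name, and the input is assumed to be a list holding the SPM in effect for each measure. With a constant SPM the result matches measureNum * SPM, while a varying SPM shows why each start time depends on all earlier measures.

```javascript
// spmPerMeasure[i] is the seconds-per-measure in effect during measure i.
// Returns the start time (in seconds) of each measure.
function measureStarts(spmPerMeasure) {
  const starts = [];
  let t = 0; // running time: the end of the previous measure
  for (const spm of spmPerMeasure) {
    starts.push(t); // measureStart = lastMeasureEnd
    t += spm; // lastMeasureEnd advances by this measure's SPM
  }
  return starts;
}

const constantTempo = measureStarts([2, 2, 2, 2]); // same as measureNum * SPM
const varyingTempo = measureStarts([2, 2, 1.5, 1]); // requires the running sum
console.log(constantTempo, varyingTempo);
```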

The general case where tempo changes gradually (but with constant acceleration) follows the physics formula

t0 + v0 * dt + .5 * a * dt * dt

where a is the rate of change of tempo (the acceleration), v0 is the original tempo and dt is the elapsed time. If we know the tempo at two points (measureStart and measureEnd), this becomes:

measureStart = measureStart + avg(SPM)*dmeasure

which applies the average tempo over a time interval. Again, this assumes constant acceleration.

Finally we consider the case where a time-stretch is in place via [a3] [a3]@3. The [..]@3 indicates that the measure comprising a3 plays 3 times longer/slower than it normally would. The question at hand is how to interpret acceleration in this context. We can interpret time stretching as an instantaneous change of SPM via SPM = SPM*3. Under 0 acceleration and assuming an SPM of 1, the first measure completes at t==1 and the second measure completes at t==4. As it turns out (hands waving), under acceleration we must not scale the acceleration by the time stretch if measure timing is to remain continuous across time-stretch changes.
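The zero-acceleration arithmetic for [a3] [a3]@3 can be checked with a short sketch. It models only the "instantaneous change of SPM" interpretation described here, with no acceleration. The `measureEnds` helper and the `{ stretch }` measure objects are hypothetical names for illustration.

```javascript
// Each measure is { stretch }, where stretch is the @N factor (1 if absent).
// Returns the completion time of each measure under zero acceleration.
function measureEnds(measures, spm) {
  let t = 0;
  return measures.map(({ stretch = 1 }) => {
    t += spm * stretch; // stretch acts as a temporary SPM multiplier
    return t;
  });
}

// [a3] [a3]@3 with an SPM of 1
const ends = measureEnds([{ stretch: 1 }, { stretch: 3 }], 1);
console.log(ends);
```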

Music Dictionaries

Tonal.js has a variety of built-ins found here:

Anatomy of a DisplayColor

The DisplayColor builtin parameter can take a string color in a variety of formats, following the WWW standard.

| Example | Description |
|---|---|
| "#333" | darkish gray in shortened form, each 3 can be [0-f] |
| "#303030" | darkish gray in standard form, each 30 can be [00-ff] |
| "rgb(r,g,b)" | rgb values between 0 and 255 |
| "rgba(r,g,b,a)" | rgb as above, a is 0.0-1.0 |
| "hsl(h,s,l)" | hsl: h between 0-360, s and l like: 50%. |
| "darkorange" | www keywords: "lime", "teal", "gold", "olive", "pink", "plum", "steelblue", "tomato", "wheat" … |
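As a small illustration of the shortened hex form, the sketch below expands a three-digit color into its six-digit equivalent by doubling each digit, which is how the web standard defines it. The `expandShortHex` helper is a hypothetical name, not an Hz function. Note that "#333" expands to "#333333", a gray very close to (but not the same as) "#303030".

```javascript
// Expand "#rgb" into "#rrggbb" by doubling each hex digit.
// Any other input is returned unchanged.
function expandShortHex(color) {
  const m = /^#([0-9a-f])([0-9a-f])([0-9a-f])$/i.exec(color);
  if (!m) return color; // already long form, or not a short hex color
  return "#" + m[1] + m[1] + m[2] + m[2] + m[3] + m[3];
}

const expanded = expandShortHex("#333");
console.log(expanded);
```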

Note Expressions on Two Planets

| key | CLAP expressions | |
|---|---|---|
| vo | VOLUME | 0 < x <= 4, plain = 20 * log(x) |
| pa | PAN | 0 left, 0.5 center, 1 right |
| tu | TUNING | semitones: from -120 to +120. |
| vi | VIBRATO | 0-1 |
| ex | EXPRESSION | 0-1 |
| br | BRIGHTNESS | 0-1 |
| pr | PRESSURE | 0-1 |
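The VOLUME row relates the linear value x to a "plain" value via 20 * log(x). Read as a decibel conversion, the logarithm is base 10. That reading is an assumption here, since the table does not name the base. The `volumeToDb` helper below is a hypothetical name for illustration.

```javascript
// Convert a linear volume expression (0 < x <= 4) to decibels,
// assuming "log" in the table means log base 10.
function volumeToDb(x) {
  if (!(x > 0 && x <= 4)) {
    throw new RangeError("volume must satisfy 0 < x <= 4");
  }
  return 20 * Math.log10(x);
}

const unityDb = volumeToDb(1); // unity gain
const maxDb = volumeToDb(4); // top of the allowed range, roughly +12 dB
console.log(unityDb, maxDb.toFixed(2));
```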

| key | MIDI expressions | |
|---|---|---|
| cc7 | Volume | mix-level |
| cc11 | Volume | expression |
| cc10 | Pan | |
| 0xE0 | Bend/Tuning | pitch-wheel 14 bits |
| cc1 | Bend | mod-wheel 7 bits |
| cc76-78 | Vibrato | Rate, Depth, Delay |
| cc11 | Expression | performance controller |
| cc74 | Brightness | |
| 0xA0 | Pressure | polyphonic aftertouch |
| 0xD0 | Pressure | channel aftertouch |
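The "pitch-wheel 14 bits" entry refers to the way a MIDI pitch-wheel message splits its value across two 7-bit data bytes, least significant first, with 8192 as the center position. The sketch below reassembles that value. The helper names are hypothetical, and the mapping from the normalized value to semitones is left out because it depends on the receiver's bend range.

```javascript
// Combine the two 7-bit data bytes of a pitch-wheel (0xE0) message.
// data1 carries the low 7 bits, data2 the high 7 bits.
function pitchWheelValue(data1, data2) {
  return ((data2 & 0x7f) << 7) | (data1 & 0x7f); // 0..16383
}

// Normalize around the center position (8192): -1 up to just under +1.
function pitchWheelNormalized(data1, data2) {
  return (pitchWheelValue(data1, data2) - 8192) / 8192;
}

const center = pitchWheelValue(0x00, 0x40); // wheel at rest
const fullDown = pitchWheelNormalized(0x00, 0x00);
const fullUp = pitchWheelValue(0x7f, 0x7f);
console.log(center, fullDown, fullUp);
```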

Build / Development notes

Songbook is described by songbook.ne and lexer.js, a formal grammar/lexer in the dialect of Nearley.js and moo. nearleyc is used to produce grammar.js, which is then combined with the nearley parsing run-time to convert a songbook into its AST. This is interpreted by the songbook runtime encapsulated by the class Songbook exposed to Hz's sandbox scripting environment.

To modify the parser, make changes to either the lexer or the grammar (.ne). Next, run npm run genParser to produce a new version of grammar.js. You can test the results in songbook/parser/tests with node genAst file.hz.

With changes to the AST may come the requirement to modify the runtime, which is mostly found in runtime/eventblock.mjs. Note that unlike most of Hz's codebase these files have the .mjs extension. This makes it easier for them to coexist in both browser-like and node environments.

See also

strudel | tonal | abc | abcmidi | impro-visor